Evolution of Programming Languages; Data Representation and Storage

Unit I: Introduction to Computing

Programming in C

Evolution of Programming Languages

What is Code?

In computing, "code" refers to a set of instructions written in a programming language that tells a computer what to do. It consists of statements, expressions, and other constructs that are used to create programs.

Early History of Programming

Mid-19th Century: The Foundation

Charles Babbage conceptualized the idea of a programmable mechanical computer, known as the Analytical Engine. Although Babbage's design was never completed, it laid the foundation for modern computing.

Babbage's Analytical Engine design

Punched cards used for programming the Analytical Engine

Ada Lovelace: First Programmer

Ada Lovelace, often considered the world's first computer programmer, wrote an algorithm for the Analytical Engine in the mid-19th century. Her work on the engine's programming is considered pioneering in the field of computing.

Ada Lovelace (1815-1852), Mathematician and Writer

Mid-20th Century: Electronic Era

Early electronic computers were programmed in machine code and, soon after, in assembly language. Both correspond directly to the instructions executed by the computer's hardware.

Machine Language & Communication

Machine Code

A computer is a machine that understands only machine language: instructions encoded in binary, as sequences of 0s and 1s.

11000000 10101010

01010101 11110000

In the real world we communicate using different languages. Similarly, each programming language has its own collection of keywords and syntax for constructing instructions.

Why So Many Languages?

- Writing machine (binary) code is difficult for humans

- Programming languages provide a convenient, human-readable way to write instructions

- Different languages serve different purposes

- Evolution driven by specific needs and improvements

Compilation Process

A compiler or an interpreter bridges the gap between high-level and low-level code. A compiler translates the whole program into machine code before it runs; an interpreter translates and executes it statement by statement.

Five Generations of Programming Languages

First Generation

Machine Languages

- Simplest type of computer language

- Uses binary code (0s and 1s)

- Interfaces directly with hardware

- Machine-specific applications

- Runs only on the type of hardware it was written for

Second Generation

Assembly Languages

- Human-readable mnemonics

- Easier than binary code

- Requires assembler for conversion

- Used for operating-system development

- Used for device-driver development

Third Generation

Procedural Languages

- High-level programming languages

- Syntax closer to human language

- Examples: C, C++, Java, FORTRAN, PASCAL

- Requires compiler or interpreter

- More user-friendly

Advanced Generations (4GL & 5GL)

Fourth Generation Languages

Focus on WHAT to do

- Syntax close to everyday human language

- Focus on tasks, not implementation

- Database handling

- Report generation

- GUI development

Fifth Generation Languages

Visual Programming & AI

- Latest stage in programming evolution

- Visual programming tools

- Constraint-based logic

- Define goals, system generates code

- Artificial Intelligence focus

Classification Summary

Low-Level Languages: First two generations (1GL, 2GL)

High-Level Languages: Next three generations (3GL, 4GL, 5GL)

Programming Language Levels

- Low-Level: Machine Language (no abstraction)

- Mid-Level: Assembly Language (less abstraction)

- High-Level: Human-like Languages (higher abstraction)

High-Level Languages

A high-level language is a programming language that people can read and write with relative ease. Its syntax resembles human language and follows grammar rules that make instructions easier to understand.

Abstraction Concept

Machine language provides no abstraction, assembly language provides less abstraction, and high-level languages provide higher abstraction by hiding internal implementation details.

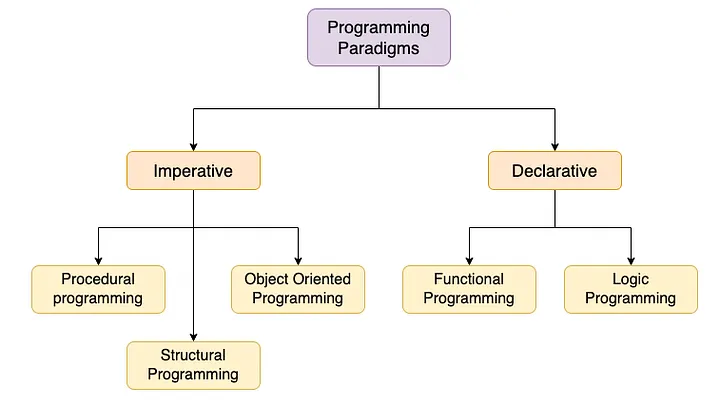

Programming Paradigms

Imperative Paradigm (Focus on HOW)

The oldest and most basic programming approach. Code describes a step-by-step process for program execution. Developers focus on how to get an answer step by step.

Examples: Java, C, Python, Ruby

- Readable, though large programs can become complex

- Easy customization

- Step-by-step instruction focus

Classification of Programming Paradigms (Source: Medium)

Declarative Paradigm (Focus on WHAT)

Writing declarative code forces you to ask first what you want out of your program rather than how to achieve it.

Examples: SQL, Haskell, Prolog

- More room for the system to optimize execution

- Focus on desired outcome

- Less concerned with implementation

Multi-Paradigm Support

Over the years, some imperative languages have received updates allowing them to support declarative-style programming. Examples: Java, Python

Future of Programming

Paradigm Shifts Throughout History

The evolution of programming has been marked by major transitions:

- Procedural Programming → Object-Oriented Programming (OOP)

- OOP → Functional Programming

- Sequential → Concurrent Programming

- Traditional → Reactive and Event-Driven Programming

🤖 Artificial Intelligence & Machine Learning

AI and ML technologies are reshaping industries, and demand is growing for skills in natural language processing, computer vision, and deep learning.

🛠️ Low-Code/No-Code Development

Low-code and no-code platforms are democratizing software development by enabling non-technical people to build applications. Traditional programming skills remain valuable alongside these platforms.

The Journey Continues

- 5 Generations: From machine code to AI-driven languages

- Multiple Paradigms: Imperative, declarative, and multi-paradigm

- Continuous Evolution: Adapting to new computing challenges

Key Takeaways

- Programming languages evolved to make human-computer interaction easier

- Each generation brought higher levels of abstraction

- Modern paradigms focus on both "what" and "how" to solve problems

- Future trends point toward AI integration and democratized development

- Continuous learning and adaptation are essential for developers

Data Representation and Storage

Why Do Computers Use Binary?

- Electronic switches: ON = 1, OFF = 0

- Reliability: two states are easy to distinguish

- Digital logic: binary maps directly onto Boolean operations

Binary Number System

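Decimal is base 10: digits 0-9, with each position worth a power of 10.

Binary is base 2: digits 0 and 1 (bits), with each position worth a power of 2.

How it works: a number's value is the sum of each digit times its position's weight, so 1101₂ = 8 + 4 + 0 + 1 = 13.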

Fundamental Data Types in C

char

Size: 1 byte (8 bits)

Range: -128 to 127 if char is signed, 0 to 255 if unsigned (whether plain char is signed is implementation-defined)

Use: Single characters

int

Size: 4 bytes (32 bits) on most systems; the C standard only guarantees at least 16 bits

Range: -2³¹ to 2³¹-1 (for a 32-bit int)

Use: Whole numbers

float

Size: 4 bytes (32 bits)

Precision: ~7 decimal digits

Use: Decimal numbers

double

Size: 8 bytes (64 bits)

Precision: ~15 decimal digits

Use: High-precision decimals

Memory Organization

Each memory location has an address

Byte

8 bits

Smallest addressable unit

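Word

The processor's natural data size: usually 4 bytes on 32-bit systems and 8 bytes on 64-bit systems

An int is 4 bytes on most current systems, including 64-bit ones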

How Integers are Stored

Positive: 42 → 00101010 (lowest 8 bits shown)

Negative: -42 → 11010110 (two's complement: invert every bit of 42, then add 1)

Floating Point Representation

IEEE 754 Standard (32-bit float)

- Sign (1 bit): 0 = positive, 1 = negative

- Exponent (8 bits): power of 2 (biased by 127)

- Mantissa (23 bits): fractional part (significant digits)

Character Encoding

Each character is stored as a numeric code (its ASCII code), and that code is held in memory in binary.

Number System Conversions

Decimal to Binary

Method: Repeatedly divide by 2 and collect the remainders, then read them from last to first

Example: 13 ÷ 2 = 6 R1

6 ÷ 2 = 3 R0

3 ÷ 2 = 1 R1

1 ÷ 2 = 0 R1

Result: 1101

Binary to Decimal

Method: Sum powers of 2

Example: 1101

1×2³ + 1×2² + 0×2¹ + 1×2⁰

8 + 4 + 0 + 1

Result: 13

Hexadecimal

Base 16: 0-9, A-F

Example: 13₁₀ = D₁₆

Binary: 1101₂ = D₁₆

Use: Compact representation of binary (each hex digit stands for exactly 4 bits)

Octal

Base 8: 0-7

Example: 13₁₀ = 15₈

Binary: 1101₂ = 15₈

Use: Historically important; each octal digit stands for exactly 3 bits

Practical Storage Examples

Integer: 42

Memory: 4 bytes

Hex: 0x0000002A

Character: 'C'

ASCII: 67

Memory: 1 byte

Hex: 0x43

String: "Hi"

H: ASCII 72 (0x48)

i: ASCII 105 (0x69)

Memory: 3 bytes (including the terminating \0)

Storage: [72][105][0]

Float: 3.14

IEEE 754:

Sign: 0 (positive)

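Exponent: 128 (3.14 = 1.57 × 2¹, so the true exponent 1 is stored as 1 + 127)

Mantissa: Fraction bits of 1.57 (3.14 has no exact binary representation, so it is rounded)

Memory: 4 bytes

Hex: 0x4048F5C3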